Substack colleague Sergei Polevikov recently did a deep dive into Hippocratic AI. (Part One; Part Two.) That inspired me to take a look at the company. Here is my shallow dive.

On January 9, 2025, Hippocratic AI announced a $141 million Series B venture round led by Kleiner Perkins. Andreessen Horowitz, General Catalyst, NVIDIA, Premji, SV Angel, Universal Health Services (UHS), and WellSpan Health participated in the round. At a pre-money valuation of $1.5 billion, Hippocratic AI is the first new unicorn of 2025. From stealth to unicorn in 21 months is a speed record if anyone keeps track of such things.

Hippocratic AI markets what it calls “safety-focused GenAI for healthcare.” Their solution includes a proprietary suite of large language models (collectively branded as Polaris), a hidden policy layer, and a suite of bots that support customer-facing tasks.

Polaris 3.0 is a “constellation” of 22 LLMs with 4.2 trillion parameters. Hippocratic says it trains models on proprietary data, clinical care plans, healthcare regulatory documents, medical manuals, and other medical reasoning documents. The company provides some information about its assessment approach here; they say they use a large panel of doctors and nurses to simulate patient interactions and evaluate the model’s response.

Conspicuously missing from the panel: real patients.

Hippocratic claims Polaris performs on par with human nurses in medical safety, clinical readiness, patient education, conversational quality, and bedside manner. That may be true. Or not. We cannot trust vendors to benchmark their own products; independent benchmarks are impossible with a proprietary model like Polaris.

I’m unsure why VCs think investing in a proprietary LLM is smart. Training and inference costs are declining, and LLMs are ubiquitous. Most of the value, and all of the moat, is at the top of the stack.

Hippocratic bots are “warm, caring, and empathetic.” They are mostly female, and they wear blue scrubs. They have diverse skin tones and hair coloring. They talk to you and ask questions about your chronic kidney disease, your gout flare-up, your infant car seats, your menopause, your erectile dysfunction, and so forth. Hippocratic hosts a library of more than 200 bots.

For example, Ava does daily check-ins for an elderly patient. Hippocratic describes Ava as “a beacon of warmth and understanding, making her patients feel valued and well-attended to.” I’ll bet they do. Over Thanksgiving dinner, Grandma gushes about her new friend Ava at the clinic.

Then there is Amber, who does Colorectal Screening Outreach. “Amber radiates care and understanding, making patients feel heard and valued. Her comforting tone and patient demeanor instill a sense of calm, especially appreciated by those with ongoing health concerns. Amber's thoughtful explanations and willingness to answer questions are always appreciated, making her a cherished point of contact.”

The jokes write themselves.

What is Hippocratic’s value proposition? That’s a nice way to ask, “Where’s the money?” The answer is not obvious.

Take a hard look at Hippocratic’s website. You see glowing endorsements from “healthcare leaders.” There are logos from healthcare systems, media, and investors.

What’s missing? I’ll give you a hint: it starts with “C.”

Hippocratic carefully labels the healthcare systems as “partners” who “are helping us ensure our AI is safe.” That means they aren’t paying money to use this shit.

There’s no evidence that Hippocratic AI delivers any value at all right now. That’s not unusual for a startup seeking to develop something new. It’s uncommon for a Series B unicorn.

Olive AI played the same game. They gave away their product, then bragged about all the hospital chains who used it. Marketing a free product is simple; it’s remarkable how easy it is to build adoption when users don’t have to pay.

The trouble starts when investors ask, “Where’s the money?”

Technology creates value in two different ways. It can reduce costs by accelerating existing tasks, or it can create new capabilities by introducing new processes. Sometimes, it does both.

In healthcare, surgical robots are a good example of the first kind of value creation. A surgical robot accelerates surgery by performing basic tasks faster and better than surgical assistants.

Magnetic Resonance Imaging (MRI) exemplifies the second kind of value creation. MRI machines opened up new diagnostic capabilities; they did not replace existing processes.

Hippocratic AI positions its technology as a tool to cut the cost of existing processes. The company aims to “close the worldwide healthcare staffing shortage gap.” They claim a global shortage of 15 million healthcare workers, citing this analysis of the World Health Organization’s National Health Workforce Accounts.

That’s a wee bit of a stretch. Read the report. Most of the gaps identified in the WHO data are in developing nations, and the most pressing need is for more midwives.

Can you see it? Dr. Ussene Isse, Minister of Health in Mozambique, meets with health workers in Quelimane, the provincial capital of Zambezia. He listens to concerns about malnutrition, malaria, and cholera, then motions for silence.

“Thank you, my friends,” he intones, “I hear your concerns.”

“What does the Ministry propose?” asks Dr. Caetano, Director of Health for the province.

Dr. Isse nods, gazes briefly towards the sky, then intones: “Mozambique needs chatbots.”

The gathered crowd erupts in applause. The Economist publishes a glowing report about Mozambique’s progressive Ministry of Health.

Let’s focus on first-world problems. The Bureau of Health Workforce (BHW) reports a shortage of about 2 million healthcare workers in the USA. Registered nurses account for about half that gap; other occupations in short supply include licensed practical nurses, family medicine physicians, adult psychiatrists, and internal medicine physicians.

We could eliminate the nursing shortage tomorrow by improving pay and working conditions. That’s not what Hippocratic proposes. Their implicit value proposition: cut costs. Use our bots so you don’t need to pay people to do these jobs.

That strategy only works as a cost-cutting measure if scarce workers do these jobs today. But do they? I’m not so sure. Time-and-motion studies show that registered nurses spend relatively little time on patient education – the task that Hippocratic’s bots do best.

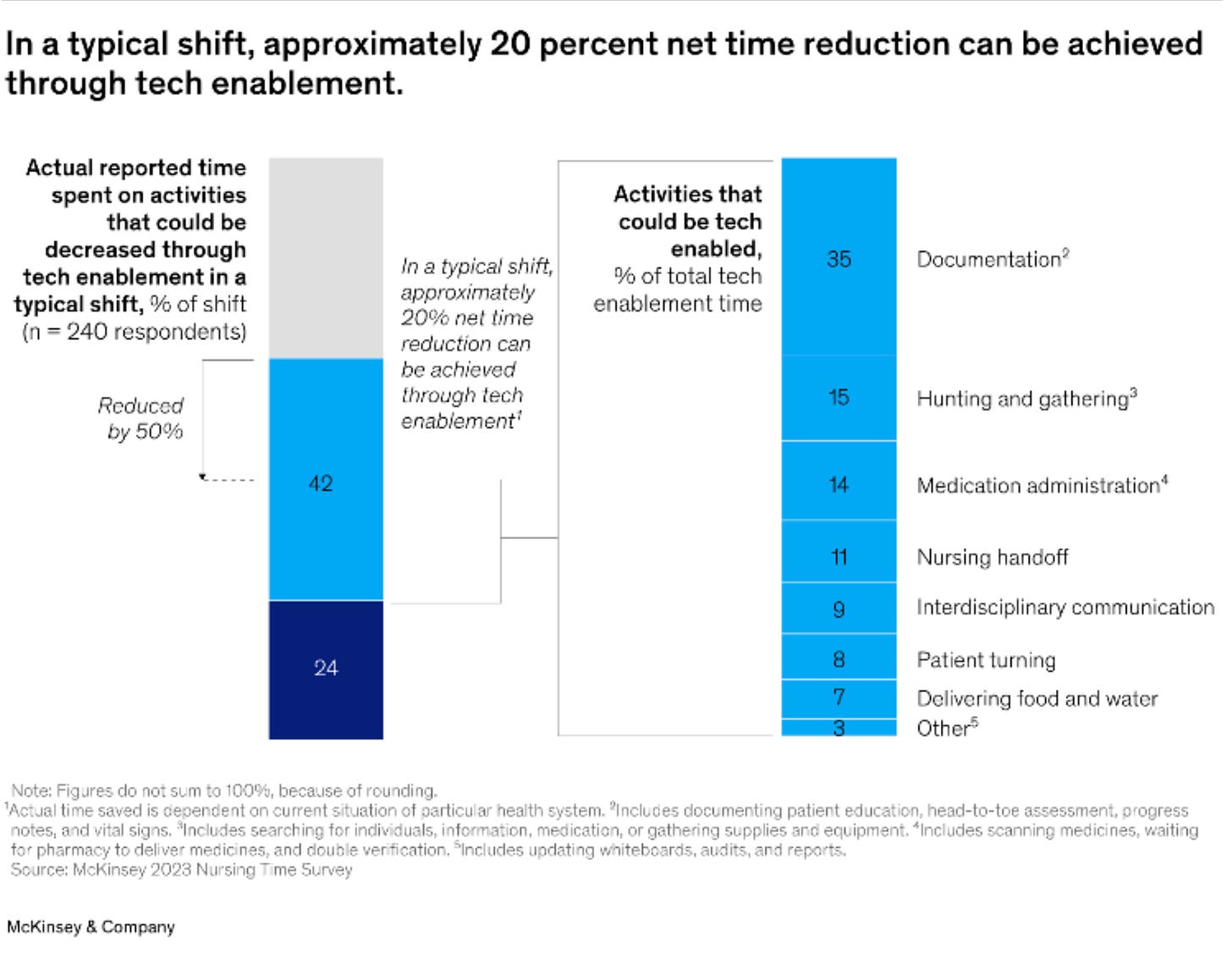

McKinsey published a study on nursing workloads. Their analysis showed that updating medical records is the biggest time sink for RNs; McKinsey did not identify patient education as a promising area for automation. On the contrary – registered nurses want to spend more time with patients, not less.

McKinsey identifies tech enablers with the highest potential to reduce nursing workloads. They include robotic delivery vehicles and pill-pickers, tools to monitor patients continuously, and ambient intelligence integrated with EHR. They do not mention bots for fake patient interactions.

We won’t replace Licensed Practical Nurses with bots, either. LPNs spend most of their time performing physical tasks in the real world:

Licensed practical nurses perform a variety of tasks under the direction of a physician, including basic bedside care and monitoring patients’ vital signs. They are also responsible for drawing blood and performing routine laboratory tests.

Practical nursing duties, depending on the work setting, can also involve direct patient care, including:

- Wound care
- Insertion and removal of urinary catheters
- Starting IVs for adult patients
- Feeding and caring for infants (as a pediatric practical nurse)
- Administering medication
- Monitoring of medications and treatments (for any adverse reactions)

Hippocratic doesn’t claim that its bots can make medical diagnoses. They won’t replace medical doctors, so don’t go there.

Research shows that supportive follow-up calls to suicidal patients from providers reduce subsequent suicide attempts. Follow-up calls work because they send a message to the patient: your life matters; we care about you.

A few years ago, my son felt depressed. The medical team at his HMO prescribed antidepressants. He took them for two months, then stopped.

The HMO had his pharmacy records. They also had information about his gun ownership, which he disclosed during an annual checkup. If they had checked, they would have known that he was in a high-risk group for suicide and that he had stopped taking his prescribed medication.

They did not check their records, and they did not call. He killed himself with one of his handguns.

I asked why they didn’t check in on him. They just shrugged. Not part of their standard of care.

Could his medical team have prevented that suicide? There’s no way to know; suicide is complicated, and I’m not sharing the story to point fingers or troll for condolences. Healthcare workers carry massive caseloads. The story's point: even with clear evidence supporting an outreach program, the HMO doesn’t have one. It’s not part of their “standard of care.”

Suppose you are a Hippocratic AI sales rep. You visit the HMO’s CEO and tell her she can save money by using your bots for outreach to suicidal patients.

“We don’t do that around here, bro,” she will laugh as she throws you out of her office.

Can Hippocratic bots improve patient outcomes? Suppose a healthcare provider uses bots to do things they want to do but can’t because it’s too expensive.

For example, make follow-up contacts with suicidal patients.

Hippocratic AI will surely plant some academic “research” that says patients respond to bots as well as they respond to human contacts. It’s not difficult to find an academic willing to give them the “science” they want; academics desperately need to bring in hard money.

Think about that for a moment. Use your common sense. Follow-up contacts reduce suicide risk by sending a message: you matter, and we care about you. When you assign bots to that task, you send a very different message: we are too busy and important to spend time on you, a mere patient. We don’t care about you. Talk to the bot. “Talk to the bot” means the same thing as “talk to my hand.”

There is “research” that says a telehealth visit is just as good as an office visit or that a physician assistant is just as good as a physician. Those claims may or may not be true, but we can’t take them at face value because everyone knows the purveyors have an axe to grind.

“You will love our bots,” said no concierge medical practice ever.

There is a role for AI in healthcare. Many healthcare systems already use AI to predict patient risk for various conditions; these models reduce rehospitalization and directly affect hospital finances. AI helps radiologists interpret MRIs, CAT scans, and other medical images. Generative AI can summarize a patient’s medical records or provide decision support for medical diagnostics.

Suppose my son’s HMO had an AI engine running in the background that could flag his case for special attention. They had all the data they needed, but the medical team was so buried in casework they couldn’t take the time to check. A prioritization system like that would add a thousand times more value than an army of patient-facing bots.

AI systems that accelerate background functions don’t need a fake human interface. Anyone with a medical or a nursing degree can handle a prompt. All of the effort that Hippocratic puts into creating “empathic” fake talking heads is wasted. Nobody wants that shit. Healthcare professionals don’t need it, and patients don’t want it.

Seriously, I hope this company fails miserably.
